Hi, I am a fifth-year Ph.D. candidate in Computer Science at UCSB. I am advised by Prof. Tao Yang and co-advised by Prof. Xifeng Yan. I previously worked with Prof. William Yang Wang, who also serves on my Ph.D. committee. I am affiliated with the UCSB Search Systems Lab and the UCSB NLP Group. During my Ph.D., I completed summer internships at Meta GenAI, AWS AI Labs, Apple MLR, and Microsoft Research. Before joining UCSB, I worked as a research scientist at NAVER, and I received my B.S. and M.S. from Seoul National University.

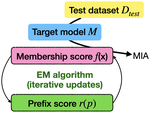

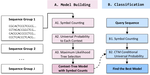

My research focuses on improving the efficiency and reliability of language models through advanced algorithms for retrieval (MacRAG, DensePhrases-UL-HN, and PKM-augmented PLMs), adaptive computation (AcuRank, Length-Adaptive Transformer, and Dynamic-TinyBERT), data augmentation (SSMix and VAT-D), and generation (Subword LM QAC and SOM-DST). I have also worked on multimodal learning (Textual KD SLU and ST-BERT) and LLM evaluation (EM-MIA and ScholarBench). Recently, I have become interested in efficient LLM inference techniques (e.g., KV cache compression, speculative decoding, and early exit), long-context modeling, retrieval (e.g., retrieval-augmented generation, multi-granularity retrieval, and reranking), inference scaling, and LLM post-training.

News:

- [Jan 2026] 📄 PPA-Plan was posted on arXiv.

- [Jan 2026] 📄 EM-MIA was accepted at EACL 2026.

- [Dec 2025] Attended NeurIPS 2025 in San Diego. 🌴

- [Sep 2025] Finished a research internship at Meta GenAI.

- [Sep 2025] 📄 AcuRank was accepted at NeurIPS 2025.

- [Sep 2025] Passed Ph.D. qualifying exam and advanced to candidacy. ✨

- [Aug 2025] 📄 ScholarBench was accepted at Findings of EMNLP 2025.

- [May 2025] 📄 MacRAG was posted on arXiv.

- [Sep 2024] Finished a research internship at AWS AI Labs.

- [Sep 2023] Finished a research internship at Apple MLR.

- [May 2023] A paper on 📄 Korean GEC was accepted at ACL 2023.

- [Oct 2022] A paper on 📄 Dense Phrase Retrieval was accepted at Findings of EMNLP 2022.

- [Sep 2022] Finished a research internship at Microsoft Research.

- [Apr 2022] 📄 VAT-D was accepted at NAACL 2022.

- [Nov 2021] Won the Best Paper Award at the SustaiNLP Workshop at EMNLP 2021.

- [Oct 2021] 📄 Dynamic-TinyBERT was accepted at the ENLSP Workshop at NeurIPS 2021.

- [Sep 2021] Started Ph.D. studies at UCSB CS. 🚀

- [May 2021] 📄 Length-Adaptive Transformer and 📄 SSMix were accepted at ACL 2021 as main conference and Findings papers, respectively.

Interests

- Efficiency

- Retrieval

- Large Language Models

- Long-context Modeling

Education

- Ph.D. in Computer Science, Sep 2021 - Present
  University of California, Santa Barbara

- M.S. in Electrical and Computer Engineering, Mar 2015 - Aug 2017
  Seoul National University

- B.S. in Electrical and Computer Engineering / Mathematical Sciences (double major), Mar 2010 - Aug 2014
  Seoul National University

- High School (early graduation), Mar 2008 - Feb 2010
  Seoul Science High School